
“You can just do things” has lately become a mantra on certain social media platforms, and it ties neatly into the rise of vibe coding. With tools like Cursor and Claude Code paired with coding-focused LLMs, AI has democratized software development to a degree never seen before.

High-level languages made programming incrementally more accessible in the past, but vibe coding threw open the floodgates. Projects that once seemed out of reach for non-developers are now within reach: almost anyone can ship a full-stack production application with paying users without much effort.

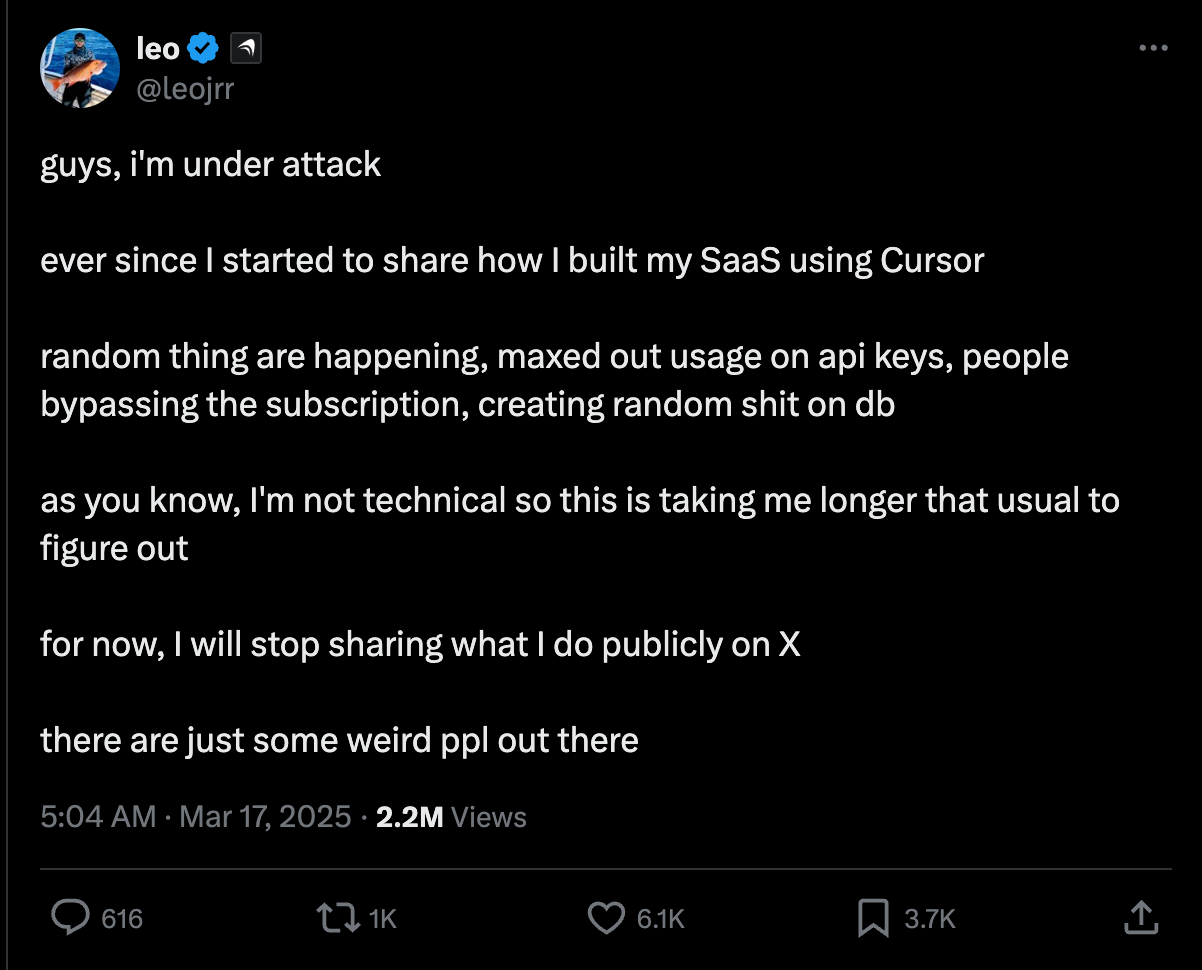

However, this doesn’t always go as planned, as seen in the following tweet:

While the "vibe" can foster rapid prototyping and breakthrough ideas, it often sidelines essential practices like security due diligence. For organizations using SaaS applications, this vibe-driven development can quickly translate into significant security risks.

What is Vibe Coding?

At its core, vibe coding is development guided by the immediate need for functionality, often leading to shortcuts and a relaxed approach to best practices. In the context of a modern SaaS-driven environment, this can manifest as:

- Quick-Fix Integration: Developers rapidly connecting new third-party apps or services to core business data without fully vetting their security posture or the scope of their requested permissions.

- Minimalist Configuration: Leaving default settings in place or opting for the quickest-to-implement configuration, bypassing granular security controls.

- Postponed Cleanup: Deferring the revocation of unused or overly permissive access tokens and credentials, treating it as a problem for "later."

- Informal Data Handling: Treating non-production or test data less rigorously, inadvertently using sensitive production data in development environments without proper masking or security.

The Core Security Risks of Vibe Coding

There are two key security risks associated with vibe coding, especially when it is used at the corporate level:

Contextual Blindness

LLMs are optimized for functionality, not security. They prioritize the "happy path" (making the feature work) over the "adversarial path" (making the feature hard to exploit). If you don’t have software engineering experience, you may not know which security pitfalls to watch for.

The "Agentic" Supply Chain

Modern agents can install their own dependencies. This introduces the risk of package hallucination: the AI suggests a library that doesn't exist, and an attacker then registers that name with a malicious payload, waiting for the next agent to install it.

The Biggest Security Red Flags

If you are vibe coding, your application likely suffers from at least one of the "Big Four" vibe-driven flaws:

1) Client-Side "Security"

AI often defaults to putting sensitive logic (like API keys or admin checks) in the frontend because it’s the fastest way to make a demo work.
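The fix is to make the server the only place where sensitive decisions happen, and the same applies to API keys, which should be used only from server-side code. Below is a minimal Python sketch of a server-side admin check. The in-memory `SESSIONS` dict, the `User` record, and the `delete_user` handler are hypothetical stand-ins for a real session store and endpoint; the point is that a client-supplied flag such as `isAdmin` is ignored entirely.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    user_id: str
    role: str


# Hypothetical server-side session store: session token -> user record.
# In a real app this would be a database or session service.
SESSIONS = {
    "token-alice": User("alice", "admin"),
    "token-bob": User("bob", "member"),
}


def delete_user(session_token: str, target_id: str, client_claims: dict) -> str:
    """Delete a user only if the server confirms the caller is an admin.

    `client_claims` stands in for whatever the frontend sends (for example
    an `isAdmin` flag). It is deliberately never consulted: anything the
    browser sends can be edited by the user.
    """
    user = SESSIONS.get(session_token)
    if user is None:
        raise PermissionError("not authenticated")
    # The role comes from server-side state, not from the request body.
    if user.role != "admin":
        raise PermissionError("admin role required")
    return f"{user.user_id} deleted {target_id}"
```

With this design, `delete_user("token-bob", "alice", {"isAdmin": True})` still raises `PermissionError`, because Bob's role is looked up on the server.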

2) Missing Input Validation

AI frequently misses the "boring" stuff like sanitizing user inputs, leading to classic SQL Injection and Cross-Site Scripting (XSS).
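To make the two classic failures concrete, here is a small Python sketch using the standard library's `sqlite3` and `html` modules. The table and helper names are illustrative only. It contrasts a query built with string formatting against a parameterized query, and shows escaping user input before it is placed into HTML.

```python
import html
import sqlite3


def make_db() -> sqlite3.Connection:
    # In-memory database with one table, for demonstration only.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # VULNERABLE: user input is pasted directly into the SQL string.
    query = f"SELECT name, email FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized: the driver passes `name` as data, never as SQL.
    return conn.execute(
        "SELECT name, email FROM users WHERE name = ?", (name,)
    ).fetchall()


def render_greeting(name: str) -> str:
    # Escape user input before inserting it into HTML to block XSS.
    return f"<p>Hello, {html.escape(name)}</p>"


conn = make_db()
payload = "' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: every row leaks
print(len(find_user_safe(conn, payload)))    # 0: no user has that literal name
print(render_greeting("<script>alert(1)</script>"))
```

The last line prints `<p>Hello, &lt;script&gt;alert(1)&lt;/script&gt;</p>`, so the browser displays the text instead of running it. Template engines with auto-escaping do the same job in a real web framework.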

3) Over-Permissioning

To ensure a feature doesn't fail due to permissions, AI agents often generate "Allow All" CORS policies or grant Admin-level IAM roles to cloud functions.
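The least-privilege alternative to an "Allow All" CORS policy is an explicit allowlist. The framework-agnostic Python sketch below assumes a hypothetical `ALLOWED_ORIGINS` set and a `cors_headers` helper that your web framework would call per request. It echoes the request's `Origin` back only when that origin is trusted, instead of sending `Access-Control-Allow-Origin: *`. The same principle applies to IAM: grant a function only the specific actions it needs rather than an Admin role.

```python
from typing import Dict, Optional

# Hypothetical allowlist: the only browser origins permitted to call this API.
ALLOWED_ORIGINS = frozenset({
    "https://app.example.com",
    "https://admin.example.com",
})


def cors_headers(request_origin: Optional[str]) -> Dict[str, str]:
    """Return CORS response headers for a request's Origin header.

    Trusted origins are echoed back; unknown origins get no
    Access-Control-Allow-Origin header, so the browser blocks the
    cross-origin read.
    """
    # The response depends on Origin either way, so tell caches to key on it.
    headers = {"Vary": "Origin"}
    if request_origin in ALLOWED_ORIGINS:
        headers["Access-Control-Allow-Origin"] = request_origin
    return headers
```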

4) Hardcoded Secrets

Agents may accidentally pull real keys from your local ‘.env’ files and bake them into the source code or commit them to history.
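A simple habit that counters this is to read secrets from the environment at runtime and fail loudly when one is missing, while keeping `.env` listed in `.gitignore`. The Python sketch below uses only `os.environ`; the `require_secret` helper and the `PAYMENT_API_KEY` name in the usage note are hypothetical.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not present in the environment."""


def require_secret(name: str) -> str:
    # Read the secret at runtime instead of hardcoding it in source code.
    value = os.environ.get(name)
    if not value:
        # Fail fast with a clear message rather than falling back to a
        # default key that might end up committed to the repository.
        raise MissingSecretError(f"environment variable {name} is not set")
    return value
```

In application code this looks like `api_key = require_secret("PAYMENT_API_KEY")`, so the source contains only the variable's name, never its value.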

Prevention 101: How to Secure the Vibe

You don't have to stop vibe coding, but you do have to stop blindly vibe coding. Security in the age of AI requires a "Trust but Verify" architecture.

1) Good Prompt Hygiene (Security-First Prompting)

Don't just ask for a feature; define the security boundaries in your prompt.

- Bad: "Build a login form for my app."

- Good: "Build a secure login form using Auth0 for authentication. Use parameterized queries for the database, implement rate-limiting, and ensure no secrets are hardcoded in the frontend."
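Spelling out requirements like "implement rate-limiting" also gives you something concrete to check in the output. As a rough reference for what to look for, here is a minimal single-process sliding-window limiter in Python. The `LoginRateLimiter` class and its defaults are hypothetical; a production deployment would typically keep this state in a shared store and rely on the identity provider's own brute-force protection as well.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class LoginRateLimiter:
    """Allow at most `max_attempts` login attempts per key within `window_s` seconds."""

    def __init__(self, max_attempts: int = 5, window_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_attempts = max_attempts
        self.window_s = window_s
        self.clock = clock  # injectable so tests can control time
        self._attempts: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, key: str) -> bool:
        """Record an attempt for `key` (e.g. an IP or username) if permitted."""
        now = self.clock()
        attempts = self._attempts[key]
        # Drop timestamps that have fallen out of the sliding window.
        while attempts and now - attempts[0] >= self.window_s:
            attempts.popleft()
        if len(attempts) >= self.max_attempts:
            return False  # over the limit: reject without recording
        attempts.append(now)
        return True
```

A login handler would call `limiter.allow(client_ip)` before checking credentials and return HTTP 429 when it comes back `False`.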

2) Implement an "AI Guardrail" Stack

Treat AI-generated code as if it were written by a highly productive but reckless junior developer.

- Pre-commit Scanners: Use tools like git-secrets or TruffleHog to catch leaked keys before they leave your machine.

- Automated SAST: Integrate Static Application Security Testing (code analysis tools) into your workflow. If the "vibe" doesn't pass the scan, it doesn't get merged.

- Dependency Auditing: Always check package.json or requirements.txt after an agent adds a library. Verify the package is legitimate and hasn't been "hallucinated."
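Dependency auditing is easier when you can see exactly which packages an agent added. The Python sketch below compares two versions of a `requirements.txt` file and reports the new package names, normalized as described in PEP 503, so a human can verify each one before it is installed. The function names are hypothetical. The script does not query any registry, so confirming that a package exists, is actively maintained, and is the one you intended remains a manual step.

```python
import re
from typing import Set

# Matches the package name at the start of a requirements line, stopping
# before any extras, version specifier, environment marker, or URL.
_NAME = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def package_names(requirements_text: str) -> Set[str]:
    """Return normalized package names from requirements.txt content."""
    names: Set[str] = set()
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        if not line or line.startswith("-"):
            continue  # skip blanks and options such as -r or -e
        match = _NAME.match(line)
        if match:
            # PEP 503 normalization: case-insensitive, runs of -_. become '-'.
            names.add(re.sub(r"[-_.]+", "-", match.group(1)).lower())
    return names


def new_dependencies(before: str, after: str) -> Set[str]:
    """Packages present after the agent's change that were not there before."""
    return package_names(after) - package_names(before)
```

Running `new_dependencies` on the file contents from before and after an agent session (for example, from `git show HEAD:requirements.txt` and the working copy) gives a short list to check by hand.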

3) The "Human-in-the-Loop" Mandate

Never allow an agent to push directly to production.

- Line-by-Line Review: Even if you didn't write the code, you are responsible for it. Use the agent to explain the security implications of the code it just wrote. Ask it: "What are the potential attack vectors for this specific function?"

- Shadow Environments: Deploy vibe-coded features to a sandbox environment first to observe behavior before exposing them to real user data.

Conclusion

Vibe coding is an incredible tool for innovation and prototyping, but human review and security work still need to happen before an application is production-ready. By treating AI as a co-pilot rather than an autopilot, we can reap the rewards of speed without falling into the trap of systemic insecurity. Vibe safely.